Data Quality

The most successful organizations rely on data-driven insights to inform their most important decisions. But the insights you can extract from your data are only as good as the data itself. That’s why data quality is so important: it helps ensure that every decision you make is backed by trusted, proven facts rather than gut feel or instinct.

Augustine G

1/11/2025 · 5 min read

Data Quality Story Time

A 70-year-old man mistakenly circumcised

Here's a funny but unfortunate story from Leicester Hospital. A 70-year-old man was scheduled to have a cystoscopy procedure. However, due to a mix-up, he ended up being circumcised instead. The reason for the mistake was surprisingly simple: the elderly man shared the exact same name as a younger patient who was supposed to undergo circumcision.

The 70-year-old was understandably devastated by the error, and the hospital faced significant consequences for the mishap. As a result, they were forced to compensate him with $24,000 for the mistake. Quite a mix-up for such a straightforward procedure!

Another interesting case involved a young man named Liam Thorp, who was mistakenly told that he qualified for early COVID-19 vaccination because of an error in his recorded height. Instead of being listed at 6 feet 2 inches tall, his height was registered as just 6.2 centimeters, which produced a wildly inaccurate body mass index (BMI) of about 28,000. By that record, Liam was one of the shortest people ever measured and extremely overweight for a human. Liam responded with humor, acknowledging his weight of 17-and-a-half stone and joking about how combining it with his erroneously short height produced the outlandish BMI figure.
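The arithmetic behind that figure is easy to reproduce, and so is the simple range check that would have caught it. BMI is weight in kilograms divided by the square of height in metres. The sketch below takes Liam's 17.5 stone and compares the correct height with the erroneous one; the 1.0 to 2.5 m plausibility bounds are an illustrative assumption, not a clinical standard.

```python
# Conversion factors (exact by definition)
STONE_TO_KG = 14 * 0.45359237  # 1 stone = 14 lb, 1 lb = 0.45359237 kg
INCH_TO_M = 0.0254


def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    return weight_kg / height_m ** 2


def is_plausible_adult_height(height_m: float, low: float = 1.0, high: float = 2.5) -> bool:
    """Hypothetical sanity check: flag adult heights outside a generous range."""
    return low <= height_m <= high


weight_kg = 17.5 * STONE_TO_KG               # about 111.1 kg
correct_height_m = (6 * 12 + 2) * INCH_TO_M  # 6 ft 2 in = 1.8796 m
recorded_height_m = 6.2 / 100                # 6.2 cm, the erroneous entry

for label, height in [("correct", correct_height_m), ("recorded", recorded_height_m)]:
    flag = "ok" if is_plausible_adult_height(height) else "REJECT"
    print(f"{label}: height={height:.4f} m, BMI={bmi(weight_kg, height):,.1f}, {flag}")
```

With the correct height the BMI comes out at about 31.5; with 6.2 cm it is roughly 28,900, in line with the figure reported above. A single range check on the height field rejects the bad entry before any BMI is calculated.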

Accuracy

Data accuracy is the degree to which data correctly describes the real-world phenomena it is intended to represent.

Assessing accuracy depends on the context, methodology and the validity of underlying hypotheses or assumptions. In public sector organizations, keeping data accurate can mean making sure that the data collected when providing services matches the information clients shared. To ensure accurate data for policy and program initiatives, users often have to check the data against trusted sources and evaluate how the data was collected and processed in the first place.
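Checking data against a trusted source can be sketched as a field-by-field comparison. The function name, record IDs, and field names below are hypothetical; the idea is simply to count how many recorded values match what the trusted source says and to list the ones that don't.

```python
def accuracy_rate(recorded, reference, fields):
    """Share of checked field values that match a trusted reference source.

    recorded/reference map a record ID to a dict of field values.
    IDs absent from `recorded` are skipped (that is a completeness problem).
    """
    checked = matched = 0
    mismatches = []
    for record_id, trusted in reference.items():
        row = recorded.get(record_id)
        if row is None:
            continue
        for field in fields:
            checked += 1
            if row.get(field) == trusted.get(field):
                matched += 1
            else:
                mismatches.append((record_id, field, row.get(field), trusted.get(field)))
    rate = matched / checked if checked else 0.0
    return rate, mismatches


# Hypothetical client records vs. the details clients actually provided
recorded = {
    "C1": {"name": "Ann Lee", "phone": "555-0101"},
    "C2": {"name": "Raj Patel", "phone": "555-0199"},
}
reference = {
    "C1": {"name": "Ann Lee", "phone": "555-0101"},
    "C2": {"name": "Raj Patel", "phone": "555-0109"},
}

rate, mismatches = accuracy_rate(recorded, reference, ["name", "phone"])
print(f"accuracy: {rate:.0%}")
for m in mismatches:
    print("mismatch:", m)
```

Returning the mismatches alongside the rate matters in practice: the percentage tells you how bad things are, while the list tells you what to fix.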

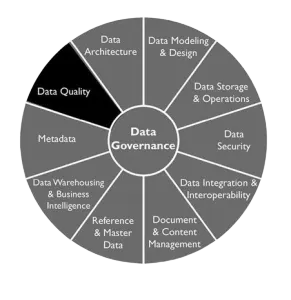

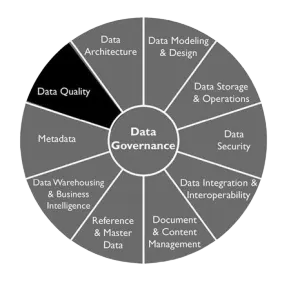

Data Quality Management

In data management, high data quality is essential for enterprises that want to make informed decisions. A robust data quality framework provides a structured approach to maintaining and improving data integrity across all levels of an organization. The framework not only addresses data errors, inconsistencies, and redundancies but also promotes adherence to established data standards.

Data Quality Dimensions

A comprehensive data quality framework comprises several key elements that together drive continuous improvement of data quality across the enterprise. Six dimensions are commonly used: accuracy (covered above), consistency, timeliness, validity, uniqueness, and completeness.

Consistency

Ensures that data from multiple sources or places does not conflict.

Example: A student’s birthdate is recorded as “05/10/2005” in both the school register and the transfer documents from a previous school. If these match, the data is consistent.
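A consistency check across two sources is safest when it compares parsed values rather than raw strings, because sources often store the same fact in different formats. In this sketch the register is assumed to use DD/MM/YYYY and the transfer documents ISO 8601; the student IDs and dates are hypothetical.

```python
from datetime import date, datetime


def parse(text: str, fmt: str) -> date:
    return datetime.strptime(text, fmt).date()


def find_conflicts(source_a, source_b, fmt_a, fmt_b):
    """Return IDs present in both sources whose dates disagree once parsed."""
    conflicts = []
    for record_id in sorted(source_a.keys() & source_b.keys()):
        if parse(source_a[record_id], fmt_a) != parse(source_b[record_id], fmt_b):
            conflicts.append(record_id)
    return conflicts


# Hypothetical data: the register uses DD/MM/YYYY, the transfer documents use ISO 8601
school_register = {"S001": "05/10/2005", "S002": "12/03/2006"}
transfer_docs = {"S001": "2005-10-05", "S002": "2006-12-03"}

print(find_conflicts(school_register, transfer_docs, "%d/%m/%Y", "%Y-%m-%d"))
```

A naive string comparison would flag both students. After parsing, only S002 conflicts (12 March versus 3 December), which looks like a day/month swap worth investigating.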

Timeliness

Measures whether data arrives within the expected or desired timeframe.

Example: A nurse submits a change of address on March 1, but the update is entered into the hospital database on March 3. The expected turnaround was one day, so the entry was one day late, showing a timeliness issue.
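Timeliness checks boil down to date arithmetic: the delay is the number of days between submission and entry, and anything beyond the allowed window counts as lateness. The sketch assumes a one-day window, under which the two-day delay in the example is one day late; the year 2025 is an arbitrary assumption.

```python
from datetime import date


def days_late(submitted: date, entered: date, allowed_days: int) -> int:
    """Days beyond the allowed processing window (0 if on time)."""
    delay = (entered - submitted).days
    return max(0, delay - allowed_days)


submitted = date(2025, 3, 1)  # year is an arbitrary assumption
entered = date(2025, 3, 3)

print(days_late(submitted, entered, allowed_days=1))
```

Making the window a parameter keeps the rule explicit; with `allowed_days=2` the same update would count as on time.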

Validity

Ensures data conforms to predefined business rules or standards.

Example: An employee’s ID should follow the format "LastnameDateOfHireJobCode" (e.g., "Blake12/21JA"). If a typo turns the job code into "JS", which is not a defined job code, the ID "Blake12/21JS" becomes invalid and needs correction.
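A validity rule like this usually combines a format check with a lookup against allowed values. The sketch assumes the hire date is written as MM/YY and the job code is two capital letters; the list of valid job codes is hypothetical.

```python
import re

# Assumed format: capitalised surname, hire month/year as MM/YY, two-letter job code
ID_PATTERN = re.compile(
    r"(?P<last>[A-Z][a-z]+)(?P<month>0[1-9]|1[0-2])/(?P<year>\d{2})(?P<job>[A-Z]{2})"
)
VALID_JOB_CODES = {"JA", "SR", "MG"}  # hypothetical list of defined job codes


def is_valid_employee_id(emp_id: str) -> bool:
    """True if the ID matches the format and uses a defined job code."""
    match = ID_PATTERN.fullmatch(emp_id)
    return match is not None and match.group("job") in VALID_JOB_CODES


for candidate in ["Blake12/21JA", "Blake12/21JS", "Blake13/21JA"]:
    print(candidate, is_valid_employee_id(candidate))
```

The pattern alone would accept "Blake12/21JS", since "JS" is two capital letters; it is the lookup against defined job codes that rejects it. "Blake13/21JA" fails the pattern because 13 is not a valid month.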

Uniqueness

Ensures that each record is stored only once, without duplication.

Example: A school’s database shows 108 students, but the actual number is 100. The extra 8 records are duplicates, which could cause confusion if only some are updated while others are not.
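Detecting duplicates depends on choosing the right matching key. The sketch below uses a hypothetical key of first name, last name, and date of birth; as the hospital story above shows, matching on name alone can confuse two different people.

```python
from collections import Counter


def record_key(record, key_fields):
    return tuple(record[f] for f in key_fields)


def find_duplicates(records, key_fields):
    """Map each key that appears more than once to its number of occurrences."""
    counts = Counter(record_key(r, key_fields) for r in records)
    return {k: c for k, c in counts.items() if c > 1}


def deduplicate(records, key_fields):
    """Keep the first record for each key, preserving the original order."""
    seen, unique = set(), []
    for r in records:
        k = record_key(r, key_fields)
        if k not in seen:
            seen.add(k)
            unique.append(r)
    return unique


# Hypothetical student records
students = [
    {"first": "Ada", "last": "Obi", "dob": "2009-04-01"},
    {"first": "Ben", "last": "Kay", "dob": "2010-06-15"},
    {"first": "Ada", "last": "Obi", "dob": "2009-04-01"},  # true duplicate
    {"first": "Ben", "last": "Kay", "dob": "2011-02-20"},  # same name, different student
]
KEY = ("first", "last", "dob")

print(find_duplicates(students, KEY))
print(len(deduplicate(students, KEY)))
```

With the full key, only Ada Obi is flagged and three records remain. Keying on `("first", "last")` alone would wrongly merge the two different students named Ben Kay.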

Completeness

Completeness describes the degree to which data values are sufficiently populated.

Data can be considered complete when it has the entries that users need to use it appropriately. This includes both the dataset itself and the additional information that helps users understand the dataset in their specific business context.

In short, completeness ensures that all required data is present and nothing is missing.

Example: In a membership form, if 3 out of 100 forms are missing addresses, the address data would be 97% complete.
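The completeness percentage in that example is just filled values divided by total records. This sketch treats `None` and whitespace-only strings as missing, which is an assumption; your own rules may differ. The form data is hypothetical.

```python
def completeness_pct(records, field):
    """Percentage of records where `field` is present and not blank."""
    if not records:
        return 0.0
    filled = sum(
        1 for r in records
        if r.get(field) is not None and str(r.get(field)).strip() != ""
    )
    return 100 * filled / len(records)


# Hypothetical forms: the first three are missing an address
forms = [
    {"member": f"M{i:03d}", "address": "" if i < 3 else f"{i} High Street"}
    for i in range(100)
]
print(f"address completeness: {completeness_pct(forms, 'address'):.0f}%")
```

Counting blank strings as missing matters: many forms submit an empty string rather than omitting the field, and a check that only looks for `None` would report 100%.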

Data Profiling:

Reviews data for patterns, inconsistencies, and errors.

Categories: Structure, Content, and Relationship Discovery.
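A very small profiler can touch all three categories: which types a column holds (structure), how many values are missing or distinct (content), and whether foreign keys point at real parent records (relationships). The function names and sample data below are hypothetical, and real profiling tools go much further.

```python
from collections import Counter


def profile_column(values):
    """Basic structure and content statistics for one column of scalar values."""
    non_null = [v for v in values if v not in (None, "")]
    return {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "types": dict(Counter(type(v).__name__ for v in non_null)),
        "most_common": Counter(non_null).most_common(1),
    }


def orphan_keys(child_rows, fk_field, parent_keys):
    """Relationship discovery: foreign-key values with no matching parent record."""
    return sorted({row[fk_field] for row in child_rows if row[fk_field] not in parent_keys})


ages = [34, 29, None, "41", 29, ""]
print(profile_column(ages))

orders = [{"id": 1, "customer_id": "C1"}, {"id": 2, "customer_id": "C9"}]
customers = {"C1", "C2"}
print(orphan_keys(orders, "customer_id", customers))
```

The profile exposes a stored-as-text age ("41") among integers and two missing values, and the relationship check finds an order for a customer that doesn't exist.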

Data Quality Tools and Solutions

Data quality tools play a crucial role in enhancing the reliability and manageability of data. Inaccurate data can lead to poor decision-making, missed opportunities, and reduced profitability. As cloud adoption continues to grow and evolve, ensuring high data quality has become increasingly vital. When implemented effectively, data quality tools address the root causes of these issues, helping organizations maintain accurate and actionable data.

Data Cleansing:

Corrects inaccurate, incomplete, or duplicated data.

Ensures consistency for decision-making and business operations.

Tools: OpenRefine, Trifacta Wrangler, Drake.
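The tools above do this at scale, but the core steps can be sketched in a few lines: normalize values, treat blanks as missing, then remove the duplicates that normalization reveals. Lowercasing the whole email address is an assumption (most providers treat addresses case-insensitively), and the records are hypothetical.

```python
import re


def clean_record(record):
    """Trim and collapse whitespace, treat blanks as missing, lowercase emails."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = re.sub(r"\s+", " ", value).strip()
            value = value or None  # empty string becomes None
        cleaned[key] = value
    if cleaned.get("email"):
        cleaned["email"] = cleaned["email"].lower()
    return cleaned


def cleanse(records):
    """Clean each record, then drop exact duplicates while keeping order."""
    seen, result = set(), []
    for record in map(clean_record, records):
        signature = tuple(sorted(record.items()))
        if signature not in seen:
            seen.add(signature)
            result.append(record)
    return result


raw = [
    {"name": "  Jane   Doe ", "email": "Jane.Doe@Example.com"},
    {"name": "Jane Doe", "email": "jane.doe@example.com"},
    {"name": "Sam Roe", "email": "  "},
]
print(cleanse(raw))
```

Order matters here: the two Jane Doe records only become recognizable as duplicates after whitespace and case are normalized, so cleansing runs before deduplication.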

Data Enrichment:

Merges first-party data with third-party sources for deeper insights.

Privacy concerns may limit use (e.g., GDPR).

Tools: Crunchbase, InsideView, Clearbit.
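At its simplest, enrichment is a left join: every first-party record is kept, and third-party attributes are attached where a shared key matches. The sketch assumes a company email domain as that key and uses hypothetical data; it does not reflect any specific vendor's API.

```python
def enrich(customers, third_party, key="domain"):
    """Left-join first-party records with third-party attributes on a shared key."""
    enriched = []
    for row in customers:
        extra = third_party.get(row.get(key), {})
        merged = {**extra, **row}  # first-party values win on conflicts
        enriched.append(merged)
    return enriched


# Hypothetical first-party customers and third-party company attributes
customers = [
    {"email": "ana@acme.example", "domain": "acme.example"},
    {"email": "li@unknown.example", "domain": "unknown.example"},
]
third_party = {"acme.example": {"industry": "Manufacturing", "employees": 250}}

for row in enrich(customers, third_party):
    print(row)
```

Letting first-party values override third-party ones is a deliberate choice: data your customers gave you directly is usually the more trustworthy source when the two disagree.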

Real-Time Email Data Validation:

Verifies email addresses so that marketing emails reach valid recipients.

Reduces bounce rates and improves deliverability.

Tools: ZeroBounce, Mailfloss, MailerLite.
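The first layer of email validation is a syntax check, sketched below with a deliberately simplified pattern (an assumption, not the full RFC 5322 grammar). Services like those listed go further, for example by checking that the domain accepts mail, which a regex cannot do.

```python
import re

# Simplified pattern: local part, "@", one or more dotted labels, alphabetic TLD
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@(?:[A-Za-z0-9-]+\.)+[A-Za-z]{2,}")


def looks_like_email(address: str) -> bool:
    """Cheap syntax check to run before any network-based verification."""
    return EMAIL_PATTERN.fullmatch(address.strip()) is not None


for candidate in ["jane.doe@example.com", "jane.doe@example", "jane doe@example.com"]:
    print(candidate, looks_like_email(candidate))
```

Running this check at the point of entry rejects obvious typos instantly, so only plausible addresses are sent on to a paid verification service.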

Big Data:

Handles large, often unstructured datasets.

Identifies patterns and insights to support decision-making.

Tools: Zoho Analytics, Splunk.

Enterprise Data Quality:

Maintains and organizes data across business applications.

Ensures accuracy, relevance, and data validation.

Tools: Oracle, Uniserv.

Data Transformation:

Extracts and converts data into usable formats for business systems.

Essential for data integration and management.

Tools: Cleo Integration Cloud, IBM DataStage, Informatica.
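A typical transformation step converts values that arrive in several formats into one standard format. This sketch normalizes dates to ISO 8601; the list of accepted input formats is an assumption, slash-separated dates are assumed to be day-first, and `%b` expects English month abbreviations (Python's default C locale).

```python
from datetime import datetime

# Assumed input formats, tried in order
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d %b %Y")


def to_iso_date(text: str) -> str:
    """Convert a date string in any known format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date: {text!r}")


for raw in ["2005-10-05", "05/10/2005", "5 Oct 2005"]:
    print(raw, "->", to_iso_date(raw))
```

Raising an error on unrecognized input, instead of guessing, keeps bad values from slipping silently into downstream systems.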

Choosing the Right Tools:

Consider business needs, pricing, usability, support, and size.

Smaller businesses may need fewer tools; larger organizations require specialized solutions.

Tackling Common Data Quality Issues

Let's start with a real-life story: The Case of the Mars Climate Orbiter

Launched by NASA in December 1998, the Mars Climate Orbiter was a probe designed to study the Martian climate and atmosphere. However, the mission ended in disaster in September 1999, when the spacecraft came in far too low and is believed to have disintegrated in Mars' atmosphere. The cause? A simple yet catastrophic data quality issue: a unit conversion error.

One engineering team's software produced results in imperial (US customary) units, while another team's software expected metric units. This discrepancy put the spacecraft on the wrong trajectory, ultimately resulting in a $327.6 million failure. The incident is a stark reminder of how even minor data quality issues can have monumental consequences.
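According to NASA's mishap investigation, ground software supplied thruster impulse data in pound-force seconds where newton-seconds were expected, a factor of about 4.45. One defensive pattern, sketched here with a hypothetical `Impulse` type, is to store a quantity in a single unit internally and convert at the boundary, so bare numbers in unknown units cannot be mixed in.

```python
from dataclasses import dataclass

# 1 pound-force = 0.45359237 kg * 9.80665 m/s^2 = 4.4482216152605 N (exact)
NEWTON_SECONDS_PER_LBF_SECOND = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """An impulse stored internally in one unit only: newton-seconds."""
    newton_seconds: float

    @classmethod
    def from_lbf_seconds(cls, value: float) -> "Impulse":
        return cls(value * NEWTON_SECONDS_PER_LBF_SECOND)

    def __add__(self, other):
        if not isinstance(other, Impulse):
            return NotImplemented  # refuse to add bare numbers of unknown unit
        return Impulse(self.newton_seconds + other.newton_seconds)


# Hypothetical thruster readings arriving from two teams in different units
metric_reading = Impulse(10.0)
imperial_reading = Impulse.from_lbf_seconds(10.0)  # converted at the boundary

total = metric_reading + imperial_reading
naive_total = 10.0 + 10.0  # raw numbers added with no unit check
print(f"converted total: {total.newton_seconds:.2f} N*s, naive total: {naive_total:.2f}")
```

The naive sum understates the imperial reading by more than a factor of four. Because `Impulse + 10.0` raises a `TypeError`, the mistake surfaces immediately in code instead of in orbit.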

Duplicate Data

Ever feel like you're seeing double? Duplicate data can clutter your database and create confusion. In an OLAP or OLTP environment, records that get duplicated on every load can quickly inflate counts and totals and erode trust with partners.

Common Data Quality Issues

Inaccurate Data

Incorrect information leads to flawed insights and poor decision-making. Example: typographical errors in names or addresses that cause incorrect information to be recorded.

Incomplete Data

Missing values paint an incomplete picture and lead to gaps in analysis. Examples include missing last names or email addresses, which make it hard to build a holistic view of your customers.

Invalid Data

An age field contains negative numbers or extremely high values that aren't realistic.

Outdated Data

Customer contact information that hasn't been updated for several years, leading to communication issues.

Inconsistent Data

Variations in data formats or units can lead to misinterpretation, and potentially to errors like the 1999 NASA unit mix-up described above.

Irrelevant Data

Data that no longer serves any purpose should be archived to cold storage. Keeping it in a data warehouse or data lakehouse, for example, just eats up space and racks up storage costs over time.

Hidden Data

Data that exists but is not easily accessible can result in missed opportunities.

Redundant Data

Unnecessary replication of data fields across multiple tables, leading to storage inefficiencies.